dwsuccess

Tuesday, November 22, 2005

Monday, November 21, 2005

Saturday, November 19, 2005

Some advice for Yao Ming:

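Big Yao: your problem isn't technique or fitness, so forget the garbage the pundits talk. Your problems are these:

(1) The Rockets have a technical and tactical core (you), but no real spiritual core, which should also be you, Yao Ming (probably because of McGrady). You haven't yet got your teammates playing to one shared plan and finding the real fun in basketball; everyone is playing for himself! Whose fault is that? Not Van's (Jeff Van Gundy's). Yours! You haven't yet taken command of the team psychologically. I play street ball, and looking for a leader is an instinct everyone has: when players are down they need someone to lean on, and if they have that person they know what to do and when. Then you'll find that the guys around you are really playing at an All-Star level. How do you get there? With numbers as good as yours, why can't you be the one they rely on? Because at those moments (the fourth quarter) you look a little rattled, and what hurts morale more than a leader who looks flustered (even if it's only his emotions)?

(2) You don't have to attack hard on every possession in the first three quarters. In other words, pick the moments when your teammates are struggling to go on the attack (especially late in the fourth quarter; it doesn't matter if Van is cursing under his breath before that, as long as you win at the end). Don't be the cannon fodder; let Swift do that. And when you do go strong, you have to score! How? I don't know. But I do know this: when all your energy is focused on a single point, you stop hearing the crowd, and even the guy guarding you doesn't seem as strong as before. It's true! I've tried it! If you fake the shot and pass, think like this: "He's definitely going to miss (and don't stare at the rim praying, that's useless), so where will the ball come down?" Move early! The early bird catches the worm. Put that Chinese cleverness to good use!

(3) Don't just mechanically run the coach's plays (that takes all the fun out of basketball). What Van really wants is for you to "grasp the spirit" of them. On the court, think more: if I change this a little..., will that big guy follow me out? If he does..., what if I then..., and so on. If you think on the court, you'll discover that the defenders are actually pretty dumb. Maybe the key to winning is making your opponents look dumb. Ha, that may not be easy, but doing it three or four times a game is enough to cement your leadership. More importantly, just running plays will lock your skills into one direction of development. You should develop toward the Dream's (Hakeem Olajuwon's) style (who says it doesn't suit Yao Ming? Step forward!), not toward being a muscle man. (Trust me, Van will never tell you this, even though every night he prays under the covers that you'll turn into the Dream.)

(4) Mindset: falling behind on the scoreboard isn't your problem, you shouldn't feel guilty toward the fans and the coach (hey, who threw that egg?), and you certainly shouldn't blame yourself for losing! That's naive. (Think about past trades, like Jim Jackson's: did anyone apologize to you?) Losing is the coach's business. You work for the owner, and your numbers already earn your salary. On the court, play one possession at a time. Don't think "we're losing..."; hypnotize yourself instead: "If this shot goes in, we win..., if we stop them on this possession, we win...", and drill it over and over. Now I finally understand why Duncan studied psychology... ha!

(5) You can never carry the whole team by yourself! I've never bought into the McGrady myth; it's not healthy! Nobody can do everything alone. {Look at yourself: shooting (60%+), dunking, blocking shots (you try to cover everyone's mistakes, and it has made those guys lazy), setting screens (after the screen, those guys have already forgotten where you are), free throws (85%+) (isn't that enough?). Yao, you're going to work yourself to death.} And you still can't win... What does that tell you? As one of the Nets players put it: "Yao Ming is a big guy, but he can't beat our whole team by himself!" You have to lift those guys up, and when one of them is lazy, yell at him. If someone ruins my mood after a game, I ruin his first! I'm an IT project manager by profession, and my motto at work is: "If you want to reach your goal, you have to get other people to do what you want them to do, by whatever means, even if they may not want to." So you also need to study your teammates' personalities: when they'll blow up, when they'll start showing off, and so on. Use your passes to decide who gets to shoot!

One more thing: don't direct your teammates with body language. When it happens too often it doesn't feel right to me, though maybe that's just my impression.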

Friday, November 18, 2005

Quoted from: 石头的歌声 ("Stone's Song")

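It turns out the uppercase/lowercase issue isn't really a problem after all. In the model options' naming convention settings, set the code character case to Uppercase, and the preview no longer shows quotes.

While I was at it, I noticed a few small improvements:

1) When viewing the properties of the whole PDM, there is a Preview tab that shows the entire generated script directly, so you no longer have to go through Database > Generate Database as before.

2) Preview now has a View Generation Options option, so you can specify the generation options directly. I could never find this option before; it turned out you had to set it in Generate Database, click "Apply" and exit, which made no sense at all.

3) Where you execute SQL, there is now an area for composing SQL, probably modeled on SQL Server's Query Analyzer. It is very bare-bones, but it's a small step forward and deserves special praise: modelers usually need to query data, so being able to run SQL scripts is actually quite useful.

4) Other things: the toolbars have finally learned to stack neatly. In V9 they were piled up row by row, and after you arranged them they'd be a mess again next time. DBMS support has clearly kept up with the times; even Oracle 9.2 and Oracle 10g are there!

I only meant to jot down a couple of remarks, but it took me the better part of half an hour. Next time, after I've used it in more depth, I'll share more.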
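The quoting behavior described above follows from how many DBMSs treat identifiers. Below is a minimal sketch, assuming an Oracle-style target where unquoted identifiers are folded to uppercase and only a double-quoted name keeps its exact case. The functions `ddl_identifier` and `apply_code_case` are hypothetical and are not the modeling tool's own logic; they only show why a mixed-case code gets quoted in the preview, and why forcing codes to uppercase removes the quotes.

```python
import re

# Simplified rule for an unquoted Oracle-style identifier: a letter followed
# by letters, digits, _, $ or #. Reserved words and length limits are ignored.
_UNQUOTED = re.compile(r"[A-Za-z][A-Za-z0-9_$#]*")


def ddl_identifier(code: str) -> str:
    """Return the name as a case-preserving DDL generator would emit it.

    Unquoted names are folded to uppercase by the DBMS, so a name may be
    written bare only if it is valid and already uppercase; otherwise it
    is wrapped in double quotes to keep its exact spelling.
    """
    if _UNQUOTED.fullmatch(code) and code == code.upper():
        return code
    return '"' + code.replace('"', '""') + '"'


def apply_code_case(code: str, case: str = "upper") -> str:
    """Mimic a 'code character case' setting applied before generation."""
    return code.upper() if case == "upper" else code


for name in ["CustomerOrder", "ORDER_ID"]:
    print(ddl_identifier(name), "->", ddl_identifier(apply_code_case(name)))
# "CustomerOrder" -> CUSTOMERORDER
# ORDER_ID -> ORDER_ID
```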

Hi Russell,

Adding to what Sugumar may have been alluding to previously, I would also recommend against migrating to SQL2000 for the following reasons:

1. MS-SQL Server in general is not as robust a package as Oracle is, especially for a warehousing operation. If you are looking for alternatives, might I suggest taking a serious look at SAPDB (commonly used for APO)?

2. The long-term costs of MS-SQL Server and Oracle are often not easily recognized. SAPDB, btw, is still freely licensed as far as I know.

Other costs to consider:

Oracle DBAs tend to require a higher salary, but that is why there are more Oracle DBAs than MS-SQL DBAs.

Backup / Restore operations are much better with Oracle than in SQL2000. SQL2000 does not have RAC, RMAN, exp/imp, or a concept of archive logging.

SQL2000 does have a VDI (Virtual Device Interface) through which one may take snapshot backups, but this is a very high-end function supported only by a few high-end disk vendors (EMC, HDS, IBM, etc.). Basic backup & restore operations, which 80% of IT shops out there do not have a grasp on, are the point at which one should start. Once a base is built, one can go on into the higher ground of snapshotting and other methods to reduce backup time-frames. SQL2000 has an online backup method, but it still pales in comparison to the other tools and utilities offered by Oracle (and brbackup/brarchive).

DB upgrades - with Oracle, you are much less likely to be tied down to a specific O.S. Microsoft, on the other hand, may say: okay, you want to run MS-SQL 2003? Then you need to upgrade your O.S. to Windows 2003 Super Advanced Server with a price tag of $$$. Upgrading Oracle may also force you to upgrade your O.S., but more than likely it will not cost as much as the Windows upgrade. Furthermore, Oracle is more likely to support older O.S. revisions than Microsoft does (or has to).

DB maintenance - Oracle has several tools to help make the database run smoothly. One can easily rebuild indexes, add/remove redo logs, set the ratio of free space kept in each block for later updates, deletions and insertions (PCTFREE / PCTUSED), etc. SQL Server has the DBCC utility.
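To make the two block-space parameters concrete, here is a toy simulation of a single Oracle data block (the parameters are written PCTFREE and PCTUSED in Oracle DDL). It is a simplified sketch, not Oracle's actual algorithm: it assumes an 8 KB block, fixed 1,000-byte rows and manual segment space management (with automatic segment space management, Oracle ignores PCTUSED). The `Block` class is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """Toy model of one data block under manual segment space management."""
    size: int = 8192      # bytes (an 8 KB block is assumed)
    pctfree: int = 10     # % of the block reserved for growth of existing rows
    pctused: int = 40     # % used below which the block takes inserts again
    used: int = 0         # bytes currently occupied by rows
    on_freelist: bool = True

    def try_insert(self, row_bytes: int) -> bool:
        """Insert a row unless that would eat into the PCTFREE reserve."""
        limit = self.size * (100 - self.pctfree) // 100
        if not self.on_freelist:
            return False
        if self.used + row_bytes > limit:
            self.on_freelist = False  # reserve reached: no more inserts here
            return False
        self.used += row_bytes
        return True

    def delete(self, row_bytes: int) -> None:
        """Free space; the block rejoins the free list once below PCTUSED."""
        self.used = max(0, self.used - row_bytes)
        if self.used * 100 < self.size * self.pctused:
            self.on_freelist = True


block = Block()
rows = 0
while block.try_insert(1000):  # hypothetical fixed-size 1,000-byte rows
    rows += 1
print(rows, block.on_freelist)          # 7 False
for _ in range(4):
    block.delete(1000)
print(block.used, block.on_freelist)    # 3000 True
```

Raising PCTFREE leaves more room for rows to grow when they are updated, at the cost of fitting fewer rows into each block.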

DBA work - Oracle DBA work is often done via the command line; SQL2000 is nearly always a GUI-based operation. But since we are talking about SAP here, this is mostly a moot point. Still, when DB access is necessary, I personally find it much easier to quickly type SQL statements via the CLI than to wait for a GUI to load up stuff... particularly in SAP, where you have 1000s of tables and indexes to work with. The Enterprise Manager of SQL2000 is not the best utensil to work with... neither is the Query Analyzer.

3. SQL Server limits your O.S. platform to Windows. For me, as a sys-admin / DBA, this would drive me nuts. All future upgrades (hardware / software) must take this into account.

4. Even though the site is a Microsoft shop, that does not mean there is no room for alternative, and often better, choices out there.

5. If cost IS a major concern, why not (Suse) Linux + SAPDB?

Just some things to ponder. In my experience of using MS-SQL Server 7 & 2000 and Oracle 7.x+, if I were a DBA, I would still pick Oracle, even if it had a higher sticker price initially.

Mark Brown

Bakbone Software

-----Original Message-----

From: Russell Brown via sap-r3-bw [mailto:sap-r3-bw@OpenITx.com]

Sent: Wednesday, July 30, 2003 4:33 PM

To:

Subject: [sap-r3-bw] Re: BW - converting from Oracle to MS SQL2000 database platform


Hi Sugumar,

There are two reasons:

1) The company is a Microsoft and SAP shop and therefore would like to standardise on MS SQL 2000.

2) The cost of maintaining Oracle licenses is huge compared to the cost of MS SQL 2000.

My concern with this migration is that MS SQL 2000 is not a thoroughly proven data warehousing platform.

Any assistance would be appreciated.

Regards,

Russell.

-----Original Message-----

From: SUGUMAR SELAM via sap-r3-bw [mailto:sap-r3-bw@OpenITx.com]

Sent: 23 July 2003 16:36

To: Russell Brown

Subject: [sap-r3-bw] Re: BW - converting from Oracle to MS SQL2000 database platform


Could you please tell us why your company is moving from Oracle to MS SQL 2000?

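Help needed: remote connections to SQL Server 2000!

I installed the Chinese edition of SQL Server 2000 on Windows 2000 Server. During setup, when choosing the authentication mode, I picked "system user login" (Windows authentication). Now I'm in trouble: when I create an ODBC connection to this SQL Server from another computer on the intranet, it reports "未与信任的SQL Server连接并相关联" (Not associated with a trusted SQL Server connection). I have tried the following, one after another:

(1) On the client machine I created a user with exactly the same name as the one on the database server, logged in to the server's system with it, and then connected to the database via ODBC. Same message.

(2) In Enterprise Manager on the database server, I changed the authentication mode of the server group to SQL Server login, but when I clicked OK, the same message appeared even on the server itself.

(3) In the business database on the server, I created a new database user set to use SQL Server authentication, then connected from the client via ODBC. Same message.

(4) In Enterprise Manager on the server, I created a new server group set to use SQL Server authentication, but I couldn't create the business database under that server group. Sigh. Is it that you must not choose "system user login" when installing SQL Server? Is it really a case of "to master the secret art, one must first... reinstall SQL Server"? Experts, please advise!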

Error message (English)

Connection failed: SQL State '28000' SQL Server Error: 18452 [Microsoft][ODBC SQL Server Driver][SQL Server]Login failed- User: Reason: Not associated with a trusted SQL Server connection.
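For comparison, the two authentication styles map onto different ODBC connection strings. A minimal sketch follows; the server and database names are placeholders and the helper `sqlserver_conn_str` is hypothetical. `DRIVER={SQL Server}`, `Trusted_Connection`, `UID` and `PWD` are standard keywords of Microsoft's classic SQL Server ODBC driver.

```python
from typing import Optional


def sqlserver_conn_str(server: str, database: str,
                       user: Optional[str] = None,
                       password: Optional[str] = None) -> str:
    """Build an ODBC connection string for the classic "SQL Server" driver.

    No user name -> Windows (trusted) authentication.
    User name given -> SQL Server authentication, which the server accepts
    only when it runs in mixed mode (SQL Server and Windows).
    """
    parts = ["DRIVER={SQL Server}", f"SERVER={server}", f"DATABASE={database}"]
    if user is None:
        parts.append("Trusted_Connection=yes")
    else:
        parts += [f"UID={user}", f"PWD={password or ''}"]
    return ";".join(parts) + ";"


# Placeholder server and database names.
print(sqlserver_conn_str("DBSERVER", "Sales"))
print(sqlserver_conn_str("DBSERVER", "Sales", user="app_user", password="secret"))
```

With the third-party pyodbc package, either string could be passed to `pyodbc.connect()`. As a hedged diagnosis rather than a certain one: error 18452 commonly appears when a SQL Server login is attempted against a server that accepts Windows logins only, or when the client's Windows account cannot be authenticated by the server. In SQL Server 2000 the authentication mode is a property of the server itself (the Security tab of the server's Properties in Enterprise Manager), not of a server group, and a change takes effect after the service is restarted.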

Thursday, November 17, 2005

Glossary

What do all the TLAs and jargon really mean?

You can contact Nigel Pendse, the author of this section, by e-mail on NigelP@olapreport.com if you have any comments, observations or user experiences to add. Last updated on April 1, 2005.

This report uses many terms that may not be familiar to you and others that you may know, but which are used in a particular way in the report. Some technical definitions are simplified here and experts may be offended by our latitude.

4GL

Fourth generation language. Although easier to use than older (3GL) languages like COBOL, these are still aimed primarily at IT professionals. Several OLAP products include server 4GLs for complex application processing.

ActiveX

Microsoft technology for deploying Windows programs over the Web. Security considerations often limit its use to intranets. Used in a number of OLAP Web products, but fading in popularity.

Ad hoc analyses

End-users being able to generate new or modified queries with significant flexibility over content, layout and calculations. Should allow simple new ratios, variances and groupings to be defined in a point-and-click fashion, and for data to be filtered or ranked. End-users should not have to pre-define such requirements.

Agent

A program to perform standard functions autonomously. Typical uses are for automated data loading and exception reporting. Not to be confused with data mining.

Aggregate tables

Tables (usually held in a relational database) which hold pre-computed totals in a hierarchical multidimensional structure. Most tools allow “sparse aggregates”, which means that only a subset of the aggregate tables need be created, with other aggregates being generated on-the-fly from them as needed.

AIX

IBM’s version of Unix.

API

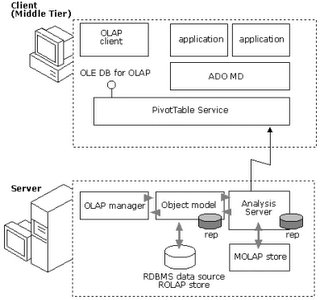

Application Programming Interface. A standard that allows programs from multiple vendors to be integrated. In OLAP terms, most OLAP server vendors have their own APIs, and the OLAP Council had been working on a cross-product API ever since it was formed in early 1995, but with little success. However, the later Microsoft OLE DB for OLAP API is already the most widely adopted by both OLAP client and server vendors, and has become the de facto standard.

APL

A Programming Language. The first multidimensional computer language, and the title of a book by Ken Iverson, published in 1962. APL is still in limited use, particularly in older forecasting and planning applications, but is rarely used for new systems.

Applets

Small programs that run in Web browsers; usually written in Java. Typically used to enhance the human factors available in HTML.

ASF

Analytical Solutions Forum, the business intelligence trade body that, in October 1999, replaced the ineffective OLAP Council which had been in existence since early 1995. The ASF managed the remarkable achievement of being much less effective than the failed OLAP Council and eventually disappeared, its solitary achievement having been the issuing of a solitary press release announcing its formation.

b2b

Business to business, conducted over the Internet

b2c

Business to consumers, conducted over the Internet. A well known British venture capitalist, Michael Jackson, was quoted (well before the dotbomb collapse) as saying that b2c is the fastest way of transferring money from venture capitalists to advertising agencies and the media.

Billion

Thousand million (10⁹), rather than the original European meaning of million million (10¹²).

BPM

Business Performance Management; also known as CPM and EPM. The combination of planning, budgeting, financial consolidation, reporting, strategy planning and scorecarding tools. Most vendors using the term do not offer the full set of components, so they adjust their version of the definition to suit their own product set.

BPR

Business Process Re-engineering. A fundamental corporate reorganization based upon the processes that deliver value to customers. It typically involves re-orienting a business from a product or location viewpoint to a customer focus.

Bubble-up exceptions

Exception conditions displayed at a consolidated level, but based on tests performed at a more detailed level. Used to highlight potentially important variances at a detail level that cancel out and are therefore masked at a more consolidated level. Offered in a few OLAP reporting tools.
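A minimal sketch of the idea, with hypothetical variance figures and a hypothetical `bubble_up` helper: each region's total looks harmless because large detail variances cancel, but the test is run at the detail level and its result is carried up to the region.

```python
# Hypothetical variances (actual minus budget) by region and product.
VARIANCES = {
    "North": {"Widgets": 120.0, "Gadgets": -115.0},
    "South": {"Widgets": -80.0, "Gadgets": 85.0},
}


def bubble_up(tree, threshold):
    """For each parent, return its consolidated total plus the children whose
    own variance exceeds the threshold (the 'bubbled-up' exceptions)."""
    report = {}
    for parent, children in tree.items():
        total = sum(children.values())
        flagged = sorted(name for name, v in children.items() if abs(v) > threshold)
        report[parent] = (total, flagged)
    return report


for region, (total, flagged) in bubble_up(VARIANCES, threshold=100.0).items():
    marker = " <- check: " + ", ".join(flagged) if flagged else ""
    print(f"{region}: total variance {total:+.0f}{marker}")
```

Both regions show a total variance of only +5, but only North is flagged, because two of its products individually miss the budget by more than the threshold.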

Cell

Data point defined by one member of each dimension of a multidimensional structure. Most potential cells in multidimensional structures are empty, leading to ‘sparse’ storage.

Client

A single user computer which is connected via a network to, and works in conjunction with, one or more shared computers (called servers), with data storage and processing distributed between them in one of a number of ways. An OLAP client will not normally store data, but will do some processing and most of the presentation work, with the server handling data storage and the rest of the processing. A client may be single or multi-tasking. Can include both thin and thick clients.

COP

Column-Oriented Processing. This is a class of database that is quite different to, but is nevertheless sometimes confused with, OLAP. COP databases perform high speed row counting and filtering at a very detailed level, using column-based structures, rather than rows, tables or cubes. COPs analyze detail and then occasionally aggregate the results, whereas OLAPs are largely used to report and analyze aggregated results with occasional drilling down to detail.

Although less well known and recognized than OLAP, COP databases have also been in use for more than 30 years (for example, TAXIR), which means that, just like OLAP, they predate relational databases. COP products are used for very different applications to OLAP and examples include Alterian, Sand Nucleus, smartFOCUS, Sybase IQ, Synera, etc.

CPM

Corporate Performance Management; see BPM.

Cube

A multidimensional structure that forms the basis for OLAP applications. Despite the name, most OLAP cubes have many more than three dimensions. In multidimensional OLAP (or MOLAP) databases, cubes are created and stored physically, whereas in relational OLAPs, cubes are a virtual concept based on a star or snowflake schema. A variety of cross-dimensional calculations and aggregations are possible within a cube, and the dimensions can usually be pivoted in reports.

Database explosion

The huge increase in database size that can result from pre-calculating a large proportion of all the possible aggregations and other pre-defined calculations. Databases can grow a hundred-fold if highly dimensioned cubes are fully pre-calculated. Despite a widespread myth, database explosion has nothing whatever to do with MOLAP or ROLAP architectures, and is just as likely to occur with either.
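The effect can be measured on a toy cube. The sketch below assumes four hypothetical dimensions, each with only one level above detail (an "all members" total), and pre-calculates every combination of detail and total; real hierarchies have more levels and explode further. The dictionary used for storage is deliberately architecture-neutral, in line with the point that explosion is not specific to MOLAP or ROLAP.

```python
from itertools import product

ALL = "*"  # stands for "all members" of a dimension


def precompute_all(base):
    """Fully pre-calculate a cube: every base cell is added into all
    2**n cells obtained by keeping or rolling up each of its n dimensions."""
    cube = {}
    for key, value in base.items():
        for rolled in product((False, True), repeat=len(key)):
            target = tuple(ALL if r else member for member, r in zip(key, rolled))
            cube[target] = cube.get(target, 0.0) + value
    return cube


# 60 hypothetical sales facts over product, customer, month and region.
base = {(f"P{i % 10}", f"C{i % 7}", f"M{i % 12}", f"R{i % 4}"): 1.0 for i in range(60)}
cube = precompute_all(base)
print(f"{len(base)} base cells -> {len(cube)} stored cells")  # 60 base cells -> 574 stored cells
```

Even with a single aggregation level per dimension, the fully pre-calculated structure is almost ten times the size of the input data.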

Data mining

The process of using statistical techniques to discover subtle relationships between data items, and the construction of predictive models based on them. The process is not the same as just using an OLAP tool to find exceptional items. Generally, data mining is a very different and more specialist application than OLAP, and uses different tools from different vendors. Normally the users are different, too. OLAP vendors have had little success with their data mining efforts.

DBMS

DataBase Management System. Used to store, process and manage data in a systematic way. May use a variety of underlying storage methods, including relational, multidimensional, network and hierarchical.

DDE

Dynamic Data Exchange. An older Microsoft Windows technology for automatically moving information between applications resident in memory via the clipboard.

Defragment

Process of reorganizing files on disk to make segments contiguous and to recover wasted space between segments. Improves performance, saves disk space and may improve data security should a hardware failure occur. Required when disk files are frequently updated in place, particularly if portions are dynamically compressed.

Dense

The majority, or significant minority (at least ten percent), of potential data cells actually occupied in a multidimensional structure.

Desktop OLAP

Low priced, simple OLAP tools that perform local multidimensional analysis and presentation of data downloaded to client machines from relational or multidimensional databases. Web versions often move the desktop processing to an intermediate server, which increases the scalability but the functionality remains comparable to (or less than) the desktop version.

DHTML

Dynamic HTML. A modern form of HTML, extended with JavaScript, that provides a degree of interactivity in ‘zero footprint’ solutions where no code need be installed on the client. Requires a modern browser. Gaining in popularity for Internet OLAP deployments, but often incurs serious performance penalties.

DLL

Dynamic Link Library. Used to integrate multiple programs and provide the impression of a single product. Can also be used to hold function libraries.

DOLAP

Variously, Desktop OLAP or Database OLAP. The Desktop OLAP variant is more commonly used, though with the move to the Web, true DOLAP is now less common than in the 1990s.

DSS

Decision Support System. Application for analyzing large quantities of data and performing a wide variety of calculations and projections.

Decision Support Services. The name used by Microsoft for the beta 3 version of what was subsequently renamed SQL Server OLAP Services and then SQL Server Analysis Services, and was originally code-named Plato.

EIS

Variously defined as Executive/Enterprise/Everyone’s Information/Intelligence System/Service/Software. A category of applications and technologies for presenting and analyzing corporate and external data for management purposes. Extreme ease of use and fast performance are expected in such systems, but analytical functionality is usually very limited.

EPM

Enterprise Performance Management; see BPM.

ERP

Enterprise Resource Planning. The modern name for expensive, integrated ledger systems. Despite the name, these systems are rarely good for planning applications.

ETL

Extraction, Transformation and Loading. Activities required to populate data warehouses and OLAP applications with clean, consistent, integrated and probably summarized data.

Euro (€)

The European common currency, adopted by 12 of the 15 members of the European Union, as part of European Monetary Union (EMU). The euro came into common circulation at the beginning of 2002.

FASMI

Fast Analysis of Shared Multidimensional Information. The summary description of OLAP applications used in The OLAP Report, and now very widely referenced elsewhere.

Gigabyte (Gb)

Strictly speaking, 1024 megabytes. In this report, it is normally used in the colloquial sense of 1000 megabytes or 1 billion bytes.

Groupware

Application for allowing a workgroup to share information, with updates by each user being made available to others, either by automated replication or concurrent sharing.

GUI

Graphical User Interface (such as Windows or the Macintosh).

HOLAP

Hybrid OLAP. A product that can provide multidimensional analysis simultaneously of data stored in a multidimensional database and in an RDBMS. Becoming a popular architecture for server OLAP.

HP-UX

Hewlett-Packard’s version of Unix, running on HP-9000 computers.

HTML

HyperText Markup Language. Used to define Web pages.

Hypercube

An OLAP product that stores all data in a single cube which has all the application dimensions applied to it.

IPO

Initial Public Offering. The flotation of a private company on a stock exchange. Public OLAP companies are nearly always listed on NASDAQ, and typically raise about $30m in their IPO.

IT

Information Technology. Sometimes used as a synonym for the computer professionals in an organization. Also sometimes known as IS (Information Systems) or DP (Data Processing).

Java

A 32-bit language used for Web applications. Usually, Java ‘applets’ are dynamically downloaded if they are needed in a session, rather than being stored locally. Wildly popular in computer companies’ marketing departments, but not so widely used commercially so far. Client-side Java is now falling out of popularity in OLAP tools.

JOLAP

The proposed new Java OLAP API, which will allow OLAP metadata and data to be created and queried. Sponsored by Hyperion/IBM and Oracle. JOLAP is still going through a long drawn-out specifications process, so no products support it yet.

Kilobyte (Kb)

Strictly speaking, 1024 bytes. In this report, it is normally used in the colloquial sense of 1000 bytes.

LAN

Local Area Network. High speed connection between desktop PCs and server machines, including file servers, application servers, print servers and other services.

Linux

Unix-like, open source operating system. Few OLAP products run on it, and sales have been minimal on that platform.

Maintenance

Fee charged by software suppliers to cover bug fixes, enhancements and (usually) helpline services. Typically charged at around 20 percent of the license fees, though some vendors offer tiered levels of service at different rates.

MDAPI

The OLAP Council’s stillborn multidimensional API, which reached version 2.0. The earlier, abortive version was called the MD-API (with a hyphen). No vendors supported even the 2.0 version (which was released in January 1998), and this ‘standard’ has now been abandoned.

MDB

Multidimensional Database. A product that can store and process multidimensional data.

MDX

Multidimensional expression language, the multidimensional equivalent of SQL. The language used to define multidimensional data selections and calculations in Microsoft’s OLE DB for OLAP API (Tensor). It is also used as the calculation definition language in Microsoft’s OLAP Services.

Megabyte (Mb)

Strictly speaking, 1024 kilobytes. In this report, it is normally used in the colloquial sense of 1000 kilobytes or 1 million bytes.

Metadata

Data about data. How the structures and calculation rules are stored, plus, possibly, additional information on data sources, definitions, quality, transformations, date of last update, user access privileges, etc.

Minicube

A subset of a hypercube, with fewer dimensions than the encompassing hypercube. The hypercube will consist of a collection of logically similar minicubes.

Model

A multidimensional structure including calculation rules and data.

MOLAP

Multidimensional [database] OLAP. We prefer to avoid the use of this term, because all OLAPs are, by definition, multidimensional, and therefore prefer the more explicit MDB.

MPP

Massively Parallel Processing. A computer hardware architecture designed to obtain high performance through the use of large numbers (tens, hundreds or thousands) of individually simple, low powered processors, each with its own memory. Normally runs Unix and must have more than one Unix kernel or more than one ‘ready to run’ queue.

Multicube

An OLAP product that can store data in the form of a number of multidimensional structures which together form an OLAP database. May use relational or multidimensional file storage.

Multidimensional

Data structure with three or more independent dimensions.

NC

Network Computer. A Web terminal with no local storage of programs or data. Includes a Web browser with Java capabilities. Not very relevant in the OLAP context.

Non-procedural

A programming approach whereby the user specifies what has to be done, but not the sequence of actions. In some cases, the system will also determine when to perform the operation. This approach is simpler to specify, but less predictable (and sometimes less efficient) than the alternative procedural approach.

ODAPI

Open Database API. A Borland standard for database connectivity.

ODBC

Open Database Connectivity. A widely adopted Microsoft standard for database connectivity.

OEM

Original Equipment Manufacturer. A company that sells products (including software) under its own label that include technology licensed from another vendor. The original product name may or may not be retained.

OLAP

On-Line Analytical Processing. A category of applications and technologies for collecting, managing, processing and presenting multidimensional data for analysis and management purposes.

OLAP Council

Ineffective former industry trade body which promoted OLAP and maintained the only standard OLAP benchmark; however, many major OLAP vendors were never members or left well before its demise. It evolved into the even more ineffective Analytical Solutions Forum which did nothing and died almost immediately. The defunct OLAP Council created a useful glossary of technical terms which is sadly no longer online.

OLAP product

A product capable of providing fast analysis of shared multidimensional information. Ad hoc analysis must be possible either within the product itself or in a closely linked product.

OLE

Object Linking and Embedding. A Microsoft Windows technology for presenting applications as objects within other applications and hence to extend the apparent functionality of the host (or client) application. Now on version 2.0.

OLE DB for OLAP

Microsoft’s OLAP API, effectively the first industry standard for OLAP connectivity. Used to link OLAP clients and servers using a multidimensional language, MDX.

OLTP

On-Line Transaction Processing. Operational systems for collecting and managing the base data in an organization, such as sales order processing, inventory, accounts payable, etc. Usually offer little or no analytical capabilities.

OO

Object-Oriented. A method of application development which allows the re-use of program components in other contexts.

PDA

Personal Digital Assistant, such as a Palm, PocketPC or Psion handheld computer. Can be linked to mobile phones or the Internet for sending and receiving e-mails or faxes or even browsing the Web. Much hyped, but little used, for OLAP purposes.

Platform

A combination of hardware and system software. Some OLAP vendors also refer to their products as ‘application platforms’, meaning that they can be used to build custom or standard applications.

Plug-in

Locally stored helper programs that are used to augment browser capabilities. Require different versions for different platforms, and possibly for different browsers, but currently capable of delivering better human factors and more functionality than Java applets. Occasionally used in OLAP Web products.

Procedural

A programming approach whereby the developer specifies exactly what must be done and in what sequence.

Q&R

Query and Reporting tool. Normally used for list-oriented reporting from relational databases.

RDBMS

Relational Database Management System. Used to store, process and manage data arranged in relational tables. Often used for transaction processing and data warehouses.

ROLAP

Relational OLAP. A product that provides multidimensional analysis of data, aggregates and metadata stored in an RDBMS. The multidimensional processing may be done within the RDBMS, a mid-tier server or the client. A ‘merchant’ ROLAP is one from an independent vendor which can work with any standard RDBMS.

SDK

Software Development Kit. A set of programs that allows software developers to create products to run on a particular platform or to work with an API.

Server

A computer servicing a number of users. It will usually hold data and do processing on the data. An application server may not necessarily store data and a file server may not necessarily do any processing.

Servlets

Java programs running on demand on servers.

Shelfware

Surplus software licenses which are bought, but not deployed. Some products actually have more shelfware than deployed seats.

Simultaneous equation

A set of calculations with circular interdependencies. Few OLAP products can resolve these automatically.
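One common way to resolve such a circular calculation is fixed-point iteration, sketched below with a hypothetical profit-share rule in which the bonus is 10 percent of profit after the bonus itself. The function name is illustrative. The iteration converges here because each pass shrinks the error (the rate is below 1), which will not hold for every circular model.

```python
def solve_bonus(profit_before_bonus, rate, tol=1e-9, max_iter=100):
    """Solve bonus = rate * (profit_before_bonus - bonus) by fixed-point iteration."""
    bonus = 0.0
    for _ in range(max_iter):
        new_bonus = rate * (profit_before_bonus - bonus)
        if abs(new_bonus - bonus) < tol:
            return new_bonus
        bonus = new_bonus
    raise ValueError("circular calculation did not converge")


bonus = solve_bonus(1100.0, 0.10)
print(round(bonus, 6))                  # 100.0
print(round(0.10 * 1100.0 / 1.10, 6))   # closed form for comparison: 100.0
```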

SKU

Stock Keeping Unit: a term used by retailers to identify the lowest level of product detail. Such level of detail is often not included in OLAP applications.

SMP

Symmetrical Multi Processing. A computer hardware architecture which distributes the computing load over a small number of identical processors, which share memory. Very common in Unix and Windows NT/2000 systems.

Snowflake schema

A variant of the star schema with normalized dimension tables.

Sparse

Only a small proportion (arbitrarily, less than 0.1 percent) of potential data cells actually occupied in a multidimensional structure.
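Using the thresholds given in this glossary (at least 10 percent occupied for "dense", under 0.1 percent for "sparse"), a structure's density is simply the number of populated cells divided by the product of the dimension sizes. A small sketch follows; the cube sizes and the "in between" label for everything else are assumptions for illustration.

```python
from math import prod


def classify_density(populated, dimension_sizes):
    """Return (density, label) using the glossary's Dense and Sparse thresholds."""
    potential = prod(dimension_sizes)  # every combination of members
    density = populated / potential
    if density >= 0.10:
        label = "dense"
    elif density < 0.001:
        label = "sparse"
    else:
        label = "in between"
    return density, label


# Hypothetical cube: 1,000 products x 500 customers x 24 months = 12 million cells.
print(classify_density(6_000, [1000, 500, 24]))   # about 0.0005 -> 'sparse'
print(classify_density(300, [10, 12, 5]))         # 0.5 -> 'dense'
```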

Sparse aggregates

To avoid database explosion, larger OLAP databases must be only partially pre-calculated. A key part of this is to create only a minority of the possible aggregates, with the others being generated on-the-fly from the nearest available aggregates.
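A minimal sketch of on-the-fly generation, assuming monthly aggregates are stored but quarterly ones are not; the data, the level names and the `get_level` helper are hypothetical. A request for a missing level is answered by rolling up a stored level directly below it.

```python
# Hypothetical stored aggregates: only the month level was pre-calculated.
STORED = {
    "month": {"2005-01": 10.0, "2005-02": 12.0, "2005-03": 8.0,
              "2005-04": 11.0, "2005-05": 9.0, "2005-06": 14.0},
}


def month_to_quarter(month):
    year, mm = month.split("-")
    return f"{year}-Q{(int(mm) - 1) // 3 + 1}"


# Roll-up rules: (stored source level, requested level) -> parent function.
ROLLUPS = {("month", "quarter"): month_to_quarter}


def get_level(level):
    """Return aggregates for `level`, computing them on the fly if not stored."""
    if level in STORED:
        return STORED[level]
    for (source, target), parent_of in ROLLUPS.items():
        if target == level and source in STORED:
            result = {}
            for member, value in STORED[source].items():
                parent = parent_of(member)
                result[parent] = result.get(parent, 0.0) + value
            return result
    raise KeyError(f"no stored level can produce {level!r}")


print(get_level("quarter"))  # {'2005-Q1': 30.0, '2005-Q2': 34.0}
```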

SPL

Stored Procedure Language. Database server based programs that can be invoked from the client or scheduled are usually called stored procedures.

SQL

Structured Query Language. The almost standard data structuring and access language used by relational databases. MDX is based loosely on SQL, though it requires a different skills set.

Star schema

A relational database schema for representing multidimensional data. The data is stored in a central fact table, with one or more tables holding information on each dimension. Dimensions have levels, and all levels are usually shown as columns in each dimension table.
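A minimal star schema can be tried out with Python's built-in sqlite3 module. The table and column names and the data are hypothetical; the point is the shape: one central fact table keyed to dimension tables whose columns hold the hierarchy levels (month, quarter, year; product, category).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE dim_product (product_id INTEGER PRIMARY KEY, product TEXT, category TEXT);
CREATE TABLE dim_time (time_id INTEGER PRIMARY KEY, month TEXT, quarter TEXT, year INTEGER);
CREATE TABLE fact_sales (
    product_id INTEGER REFERENCES dim_product(product_id),
    time_id    INTEGER REFERENCES dim_time(time_id),
    amount     REAL
);
INSERT INTO dim_product VALUES (1, 'Widget', 'Hardware'), (2, 'Gizmo', 'Hardware'), (3, 'Manual', 'Books');
INSERT INTO dim_time VALUES (1, '2005-01', '2005-Q1', 2005), (2, '2005-04', '2005-Q2', 2005);
INSERT INTO fact_sales VALUES (1, 1, 100.0), (2, 1, 50.0), (3, 1, 20.0), (1, 2, 70.0), (3, 2, 30.0);
""")

# Aggregate the facts by levels taken from two dimension tables.
rows = conn.execute("""
SELECT p.category, t.quarter, SUM(f.amount)
FROM fact_sales f
JOIN dim_product p ON p.product_id = f.product_id
JOIN dim_time t ON t.time_id = f.time_id
GROUP BY p.category, t.quarter
ORDER BY p.category, t.quarter
""").fetchall()
print(rows)
```

In a snowflake schema, `category` would move out of `dim_product` into its own normalized table referenced by a key, adding one more join to the query.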

Summary tables

Often used in RDBMSs to store pre-aggregated information, rather than holding it in the same table as the base data. Used to improve responsiveness. Sometimes also called aggregate tables.
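A sketch of a summary table, again using sqlite3 with hypothetical names: the detail rows stay in `sales`, while a separate table holds the totals by region and month. Being a plain table, it is a snapshot and would have to be rebuilt or refreshed whenever the base data changes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, month TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", [
    ("North", "2005-01", 100.0), ("North", "2005-01", 40.0),
    ("North", "2005-02", 60.0), ("South", "2005-01", 90.0),
])

# Pre-aggregate once into a separate table instead of the base table.
conn.execute("""
CREATE TABLE sales_by_region_month AS
SELECT region, month, SUM(amount) AS amount, COUNT(*) AS n_rows
FROM sales
GROUP BY region, month
""")

# Reports now read the small summary table rather than scanning the detail.
summary = conn.execute(
    "SELECT region, month, amount FROM sales_by_region_month ORDER BY region, month"
).fetchall()
print(summary)
```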

SVR4

System V Release 4, a standard version of Unix used by a number of manufacturers as the basis for their own Unix variants.

TCO

Total Cost of Ownership. Includes hardware, software, services and maintenance.
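A back-of-the-envelope TCO calculation, assuming (as the Maintenance entry suggests is typical) maintenance at 20 percent of the license fee per year. All figures are hypothetical and the function is only a sketch; a real comparison would also include staff and other running costs.

```python
def tco(license_fee, hardware, services, years, maintenance_pct=20):
    """Total cost of ownership over `years` years.

    Maintenance is assumed to be charged every year at `maintenance_pct`
    percent of the license fee.
    """
    maintenance = license_fee * maintenance_pct * years / 100
    return license_fee + hardware + services + maintenance


# Hypothetical 3-year deal: 100k licenses, 50k hardware, 30k services.
print(tco(100_000, 50_000, 30_000, years=3))   # 240000.0
```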

Tensor

The code-name for Microsoft’s OLAP API, a set of OLE COM objects and interfaces designed to add multidimensionality to OLE DB. It has become the de facto industry standard multidimensional API, being adopted both by most front-end OLAP tools vendors and by several other OLAP database vendors. The official name is OLE DB for OLAP, and the 1.0 specification was first published in February 1998.

Terabyte (Tb)

Strictly speaking, 1024 gigabytes. In this report, it is normally used in the colloquial sense of 1000 gigabytes or 1 trillion bytes.
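The gap between the strict (binary) and colloquial (decimal) meanings grows with each step, as this short sketch shows; the function name is illustrative and the abbreviations follow this report's usage.

```python
UNITS = ["Kb", "Mb", "Gb", "Tb"]  # abbreviations as used in this report


def unit_sizes(unit):
    """Return (strict, colloquial) sizes in bytes for a report unit."""
    power = UNITS.index(unit) + 1
    return 1024 ** power, 1000 ** power


for unit in UNITS:
    strict, colloquial = unit_sizes(unit)
    gap = (strict - colloquial) * 100 / colloquial
    print(f"{unit}: {strict:,} vs {colloquial:,} bytes ({gap:.1f}% larger)")
```

So a terabyte in the strict sense is about 10 percent larger than the colloquial one, against about 2.4 percent for a kilobyte.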

Thin client

A form of client/server architecture in which no data is stored and relatively little processing occurs on the client machine, which may in extreme cases be a Network Computer (NC). Other forms include the NetPC, which is a Windows machine with limited local storage and configurability. The thin client approach is a very popular concept, but its use should be viewed with caution for sophisticated analytical applications or power user access. Note that ‘thin clients’ often actually require significant processing power and RAM, as well as a high bandwidth network connection, and in the business context, are usually fairly high specification PCs with hard disk drives. See also zero-footprint.

TLA

Three Letter Acronym, like this one.

TP

Transaction Processing. The operational systems used to collect and manage the base data of an organization. See also ERP and OLTP.

VAR

Value Added Reseller. A company that resells another vendor’s product together with software, applications or consulting services of its own, thus adding value. The original product’s name is usually still used.

VC

Venture Capitalist. Source of investment capital for small, growing companies. Usually have an interest in companies going public in order to realize their investment.

VGA

Video Graphics Array. An IBM PC display standard with 640x480 pixels. Introduced with the original PS/2, it has largely been superseded by higher resolution screens, usually referred to as Super VGA and X[V]GA, typically with 800x600 or 1024x768 pixels. With larger monitors, sizes of 1280x1024 and 1600x1200 are becoming more common. Objects of fixed pixel size, designed for VGA screens, look very small and may be hard to read when displayed on higher resolution monitors.

Virtual memory

Apparently extended memory on a computer, consisting partly of real memory (RAM) and partly of disk space. A technique to handle programs and applications that are too large to fit into real memory. Can degrade performance if used too heavily.

WAN

Wide Area Network. Usually, two or more geographically dispersed LANs connected by lower speed links. Can cause problems with client/server applications that transmit large quantities of data between servers and clients.

XML

eXtensible Markup Language, the emerging standard for defining, representing and dynamically sharing information across the Internet. Already widely used in OLAP products.

XML for Analysis

Microsoft’s new multi-platform version of OLE DB for OLAP that allows MDX queries to be handled using XML documents. Not yet in widespread use, but supported by Hyperion Essbase 7, and it will be the native query API for Microsoft SQL Server 2005.

Zero-footprint

Web architecture not requiring any software to be installed locally. This usually means HTML or DHTML, but some vendors also make this claim for software that uses Java applets.


All information copyright ©2005, Optima Publishing Ltd, all rights reserved.

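Comparison of Brio with BO and Cognos

Author: miller  Date: June 30, 2005

The following is a product-by-product comparison, reposted here for reference only:

Brio vs. Cognos [reposted]

Compared with Cognos, Brio's advantages are:

Product integration

Brio: Brio's product line is fully integrated, with the same interface throughout, and a single product provides all the functionality. All products also use the same file format, so products on different platforms, in both client/server and web modes, can share queries and reports.

Cognos: Cognos's products are quite fragmented. Each product has a different interface; even the client/server and web versions of Impromptu and PowerPlay differ from each other. Accomplishing a task often requires switching from one product to another, so users frequently don't know which product provides the function they need.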

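Metadata management

Brio: Through BrioQuery's Open Metadata Interpreter (OMI), users can easily view important information about the data and can access the enterprise's existing metadata.

Cognos: Cognos cannot use existing metadata; IT has to spend several weeks rebuilding it.

OLAP access (MDD)

Brio: In both client/server and web modes, Brio provides offline analysis, which improves user productivity and reduces the load on the OLAP server. It supports all multidimensional databases, including Metacube and Essbase.

Cognos: Cognos's web browser client cannot do offline analysis, Cognos's reporting and web query tools do not support OLAP server navigation, Metacube is not supported, and the UNIX client does not support Essbase.

IT management

Brio: Brio does not require data cubes to be defined; it reads the data in the data warehouse directly and can use existing data structures.

Cognos: Cognos requires building data cubes and dedicated metadata, which for the IT department is duplicated, unnecessary work.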

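Company viability

Brio: Brio maintains good relationships with industry-leading partners, including server-side OLAP vendors such as Hyperion, IBM and Microsoft.

Cognos: Cognos has entered the OLAP server market and is now in direct competition with vendors that used to be good partners, including Hyperion, IBM and Microsoft.

Ad hoc drilling vs. predefined drill paths

Brio: Brio supports both ad hoc drilling and drilling along predefined paths, giving data analysis great flexibility. Brio also lets users add fields, so they don't have to keep asking IT for help.

Cognos: Users are limited to drilling along predefined paths. Cognos PowerCube hierarchies are designed by IT, so if IT has not defined the hierarchies correctly, users are stuck with an ineffective drill path.

Building analytic applications

Brio: Brio can create EIS dashboards using controls, layout tools and live, embeddable report components. Applications for all platforms, in both client/server and web modes, are developed in JavaScript. Its EIS section offers good interactivity.

Cognos: Portfolio is not integrated with Impromptu or PowerPlay and runs only on Windows, which limits web application development and rules out applications for UNIX. Users cannot access EIS reports (Portfolio) over the web.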

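Platform support

Brio: Brio's enterprise-class OnDemand Server supports NT and UNIX platforms.

Cognos: Cognos's Impromptu Web Query and Web Reports do not support UNIX, so databases, data marts or data warehouses stored on UNIX servers become unusable.

Server scalability

Brio: OnDemand Server 6.0 supports load balancing and fault tolerance.

Cognos: Of its three server products, only PowerPlay Enterprise Server supports load balancing and fault tolerance; Impromptu Web Query Server and Impromptu Web Reports Server do not.

Web clients

Brio: Brio.Insight and Brio.Quickview support SSL communication with OnDemand Server and through proxy servers, so they can serve large network environments and extranet solutions.

Cognos: Of its three server products, only PowerPlay Enterprise Server supports SSL and proxy servers; Impromptu Web Query Server and Impromptu Web Reports Server do not.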

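Brio vs. Business Objects [reposted]

Compared with Business Objects, Brio's advantages are:

Building and distributing web-based analytic applications

Brio: Uses JavaScript, which is supported on multiple platforms and runs in both web and C/S environments.

BO: BO uses VBA scripts, and it is currently uncertain whether they can run in environments other than the web or 32-bit Windows.

EIS functionality

Brio: The Brio EIS section is a powerful programmable container that can control and connect Brio objects. Brio can build rich EIS dashboards using controls, layout tools and live, embeddable report components.

BO: Business Objects has not emphasized EIS in its products.

Offline analysis

Brio: Brio web users can analyze data online or offline, maximizing user productivity and reducing network traffic.

BO: Business Objects' WebIntelligence product supports online analysis only.

Metadata management

Brio: Brio can access metadata in any data source and makes full use of existing metadata, reducing the maintenance workload for the IT department. Brio also provides customizable annotation dialogs, so administrators can show users as much metadata as possible and make decision analysis clearer.

BO: BO requires metadata to be stored in a Universe, which is a proprietary solution. Although metadata can be imported from some data sources as a one-time operation, it is not kept dynamically consistent in real time.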

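Web functionality

Brio: Brio's web client has exactly the same functionality as the client/server client. Web and C/S users share files with no loss of functionality.

BO: WebIntelligence users must wait for an HTML page to be generated every time they change formatting, drill down or reposition a report. When WebIntelligence users open documents created by C/S users or pushed by the scheduler, they can only view or refresh them, and they cannot save the files locally.

Intuitive interface

An important reason end users often choose Brio over BO is that Brio's interface is friendlier and more intuitive, which reduces training costs, improves productivity and lightens the burden on IT.

Leading enterprise information portal

Enterprise reporting

Brio: Brio provides a complete enterprise reporting solution.

BO: BO has no enterprise reporting tool.

Web access to mainstream OLAP databases

Brio: Brio web users can access MDD, and both web and C/S users can access data retrieved from MDD offline.

BO: Only C/S users can access MDD, and these users cannot access MDD through a Universe.

UNIX servers

Brio: Brio's servers support AIX, HP and Solaris.

BO: BO has only just begun to release UNIX servers.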

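More flexible integration with existing security systems

Brio: Brio can use either database security or custom JavaBean authentication for security management, which is very flexible.

BO: BO can only import database user groups into the Universe.

Ad hoc drilling and predefined drill paths

Brio: Brio lets users drill ad hoc or along predefined paths.

BO: IT administrators must predefine the drill paths.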

Mark almost got the URL,

it's actually: http://localhost/odshtml/Brio.html

If that does not work, check that IIS is running, or try replacing "localhost" with the machine name or the actual IP address in the URL!

Hope this works. Regards, Brenden

Slowly Changing Dimensions Are Not Always as Easy as 1, 2, 3

The three fundamental techniques for changing dimension attributes are just the beginning

By Margy Ross and Ralph Kimball

To kick off our first column of the year, we're going to take on a challenging subject that all designers face: how to deal with changing dimensions. Unlike most OLTP systems, a data warehouse has tracking history as one of its major objectives. So, accounting for change is one of the analyst's most important responsibilities. A sales force region reassignment is a good example of a business change that may require you to alter the dimensional data warehouse. We'll discuss how to apply the right technique to account for the change historically. Hang on to your hats: this is not an easy topic.

QUICK STUDY

Business analysts need to track changes in dimension attributes. Reevaluation of a customer's marketing segment is an example of what might prompt a change. There are three fundamental techniques.

Type 1 is most appropriate when processing corrections; this technique won't preserve historically accurate associations. The changed attribute is simply updated (overwritten) to reflect the most current value.

With a type 2 change, a new row with a new surrogate primary key is inserted into the dimension table to capture changes. Both the prior and new rows contain as attributes the natural key (or durable identifier), the most-recent-row flag and the row effective and expiration dates.

With type 3, another attribute is added to the existing dimension row to support analysis based on either the new or prior attribute value. This is the least commonly needed technique.

Data warehouse design presumes that facts, such as customer orders or product shipments, will accumulate quickly. But the supporting dimension attributes of the facts, such as customer name or product size, are comparatively static. Still, most dimensions are subject to change, however slow. When dimensional modelers think about changing a dimension attribute, the three elementary approaches immediately come to mind: slowly changing dimension (SCD) types 1, 2 and 3.
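To make the three basic techniques concrete, here is a minimal Python sketch that applies each one to an in-memory customer dimension. It illustrates the ideas under stated assumptions and is not a prescribed implementation: the row layout, the `segment` attribute, the `HIGH_DATE` sentinel for an open-ended expiration date and the convention of expiring the old row on the day before the change are all hypothetical choices.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

# Hypothetical "open-ended" expiration date for the row currently in effect.
HIGH_DATE = date(9999, 12, 31)


@dataclass
class CustomerRow:
    surrogate_key: int            # warehouse-assigned key, unique per row
    natural_key: str              # durable business identifier, e.g. a customer ID
    segment: str                  # the attribute that changes
    prior_segment: Optional[str]  # used only by the type 3 example
    effective: date
    expiration: date
    is_current: bool              # the "most-recent-row" flag


def scd_type1(rows: List[CustomerRow], natural_key: str, new_segment: str) -> None:
    """Type 1: overwrite the attribute in place; the old value is lost."""
    for r in rows:
        if r.natural_key == natural_key:
            r.segment = new_segment


def scd_type2(rows: List[CustomerRow], natural_key: str,
              new_segment: str, change_date: date) -> int:
    """Type 2: expire the current row and insert a new row with a new surrogate key."""
    current = next(r for r in rows if r.natural_key == natural_key and r.is_current)
    current.is_current = False
    current.expiration = change_date - timedelta(days=1)
    new_key = max(r.surrogate_key for r in rows) + 1
    rows.append(CustomerRow(new_key, natural_key, new_segment, None,
                            change_date, HIGH_DATE, True))
    return new_key


def scd_type3(rows: List[CustomerRow], natural_key: str, new_segment: str) -> None:
    """Type 3: keep one prior value in an extra column on the same row."""
    for r in rows:
        if r.natural_key == natural_key and r.is_current:
            r.prior_segment = r.segment
            r.segment = new_segment
```

Only type 2 adds rows; types 1 and 3 change the existing row, which is why they cannot answer "what was the value at the time of the fact?" beyond one prior value.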

These three fundamental techniques, described in Quick Study, are adequate for most situations. However, what happens when you need variations that build on these basics to serve more analytically mature data warehouse users? Business folks sometimes want to preserve the historically accurate dimension attribute associated with a fact (such as at the time of sale or claim), but maintain the option to roll up historical facts based on current dimension characteristics. That's when you need hybrid variations of the three main types. We'll lead you through the hybrids in this column.

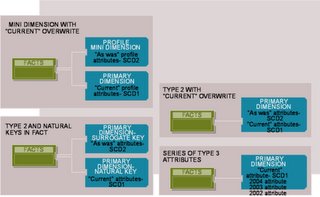

The Mini Dimension with "Current" Overwrite

When you need historical tracking but are faced with semi-rapid changes in a large dimension, pure type 2 tracking is inappropriate. If you use a mini dimension, you can isolate volatile dimension attributes in a separate table rather than track changes in the primary dimension table directly. The mini-dimension grain is one row per "profile," or combination of attributes, while the grain of the primary dimension might be one row per customer. The number of rows in the primary dimension may be in the millions, but the number of mini-dimension rows should be a fraction of that. You capture the evolving relationship between the mini dimension and primary dimension in a fact table. When a business event (transaction or periodic snapshot) spawns a fact row, the row has one foreign key for the primary dimension and another for the mini-dimension profile in effect at the time of the event.
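A minimal sketch of the mini-dimension idea, assuming profiles are built from three hypothetical banded attributes (age band, income band, purchase frequency). Each distinct combination gets one profile key, and each fact row records both the customer key and the profile key in effect when the event occurs. A real ETL job would look these keys up in database tables rather than in a Python dictionary.

```python
from itertools import count
from typing import Dict, Tuple

# Hypothetical profile: (age band, income band, purchase frequency).
Profile = Tuple[str, str, str]


class ProfileMiniDimension:
    """One row per distinct combination of volatile attributes (a "profile")."""

    def __init__(self) -> None:
        self._key_by_profile: Dict[Profile, int] = {}
        self._keys = count(1)

    def key_for(self, profile: Profile) -> int:
        # Reuse the existing row for a known combination; otherwise add one.
        if profile not in self._key_by_profile:
            self._key_by_profile[profile] = next(self._keys)
        return self._key_by_profile[profile]

    def __len__(self) -> int:
        return len(self._key_by_profile)


def make_fact_row(customer_key: int, profile: Profile,
                  minidim: ProfileMiniDimension, amount: float) -> dict:
    """A fact row carries the primary-dimension key and the profile key in effect now."""
    return {
        "customer_key": customer_key,
        "profile_key": minidim.key_for(profile),
        "sales_amount": amount,
    }
```

Because profiles are shared across customers, the mini dimension grows with the number of distinct attribute combinations rather than with the number of customers.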

Profile changes sometimes occur outside of a business event, for example when a customer's geographic profile is updated without a sales transaction. If the business requires accurate point-in-time profiling, a supplemental factless fact table with effective and expiration dates can capture every relationship change between the primary and profile dimensions. One more embellishment with this technique is to add a "current profile" key to the primary dimension. This is a type 1 attribute, overwritten with every profile change, that's useful for analysts who want current profile counts regardless of fact table metrics or want to roll up historical facts based on the current profile. You'd logically represent the primary dimension and profile outrigger as a single table to the presentation layer if doing so doesn't harm performance. To minimize user confusion and error, the current attributes should have column names that distinguish them from the mini-dimension attributes. For example, the labels should indicate whether a customer's marketing segment designation is a current one or an obsolete one that was effective when the fact occurred — such as "historical marital status at time of event" in the profile mini dimension and "current marital status" in the primary customer dimension.
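The supplemental factless fact table and the type 1 "current profile" key can be sketched in a few lines. This assumes a hypothetical `history` list standing in for the factless fact table, dictionaries standing in for dimension rows, and a hypothetical `HIGH_DATE` sentinel for the relationship currently in effect; it is an illustration, not a production ETL routine.

```python
from datetime import date, timedelta
from typing import List, Optional

HIGH_DATE = date(9999, 12, 31)  # hypothetical open-ended expiration date


def change_profile(customer: dict, new_profile_key: int, change_date: date,
                   history: List[dict]) -> None:
    """Record a profile change that happens without any business event."""
    # Close the customer's open row in the supplemental factless fact table.
    for row in history:
        if row["customer_key"] == customer["customer_key"] and row["expiration"] == HIGH_DATE:
            row["expiration"] = change_date - timedelta(days=1)
    history.append({
        "customer_key": customer["customer_key"],
        "profile_key": new_profile_key,
        "effective": change_date,
        "expiration": HIGH_DATE,
    })
    # Type 1 overwrite of the "current profile" key on the primary dimension row.
    customer["current_profile_key"] = new_profile_key


def profile_as_of(customer_key: int, as_of: date, history: List[dict]) -> Optional[int]:
    """Point-in-time lookup against the factless fact table."""
    for row in history:
        if row["customer_key"] == customer_key and row["effective"] <= as_of <= row["expiration"]:
            return row["profile_key"]
    return None
```

The factless table answers "which profile applied on a given date", while the overwritten key on the customer row answers "which profile applies now" without any date logic.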

Type 2 with "Current" Overwrite

Another variation for tracking unpredictable changes while supporting rollup of historical facts to current dimension attributes is a hybrid of type 1 and type 2. In this scenario, you capture a type 2 attribute change by adding a row to the primary dimension table. In addition, you have a "current" attribute on each row that you overwrite (type 1) for the current and all previous type 2 rows. You retain the historical attribute in its own original column. When a change occurs, the most current dimension row has the same value in the uniquely labeled current and historical ("as was" or "at time of event") columns.
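Here is a minimal sketch of the type 2 with "current" overwrite hybrid, assuming dictionary rows with hypothetical `historical_segment` and `current_segment` columns and a hypothetical `HIGH_DATE` sentinel marking the row in effect. A change adds a new type 2 row, then overwrites the current column on every row that shares the natural key.

```python
from datetime import date, timedelta
from typing import List

HIGH_DATE = date(9999, 12, 31)  # hypothetical open-ended expiration date


def apply_hybrid_change(rows: List[dict], natural_key: str,
                        new_segment: str, change_date: date) -> None:
    """Type 2 row for history plus a type 1 overwrite of the "current" column."""
    current = next(r for r in rows
                   if r["natural_key"] == natural_key and r["expiration"] == HIGH_DATE)
    current["expiration"] = change_date - timedelta(days=1)
    rows.append({
        "surrogate_key": max(r["surrogate_key"] for r in rows) + 1,
        "natural_key": natural_key,
        "historical_segment": new_segment,  # "as was" value, never overwritten
        "current_segment": new_segment,
        "effective": change_date,
        "expiration": HIGH_DATE,
    })
    # Type 1 overwrite: every row for this customer now reports the same current value.
    for r in rows:
        if r["natural_key"] == natural_key:
            r["current_segment"] = new_segment
```

After a change, the newest row holds the same value in both columns, while older rows keep their original historical value next to the refreshed current one.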

You can expand this technique to cover not just the historical and current attribute values but also a fixed, end-of-period value as another type 1 column. Although it seems similar, the end-of-period value may differ from both the historical and the current value. Say a customer's segment changed on Jan. 5 and the business wants to create a report on Jan. 10 to analyze last period's data based on the customer's Dec. 31 designation. You could probably derive the right information from the row effective and expiration dates, but providing the end-of-period value as an attribute simplifies the query. If this query occurs frequently, it's better to have the work done once, during the ETL process, rather than every time the query runs. You can apply the same logic to other fixed characteristics, such as the customer's original segment, which never changes.

Instead of having the historical and current attributes reside in the same physical table, the current attributes could sit in an outrigger table joined to the dimension natural key. The same natural key, such as Customer ID, likely appears on multiple type 2 dimension rows with unique surrogate keys. The outrigger contains just one row of current data for each natural key in the dimension table; the attributes are overwritten whenever change occurs. To promote ease of use, have the core dimension and outrigger of current values appear to the user as one, unless this hurts application performance.
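The end-of-period column can be filled once during ETL from the row effective and expiration dates. The sketch below assumes hypothetical column names and a Dec. 31 period end; for each natural key it looks up the type 2 row in effect on that date and stamps its value onto every row for that key.

```python
from datetime import date
from typing import List, Optional

HIGH_DATE = date(9999, 12, 31)  # hypothetical open-ended expiration date


def value_as_of(rows: List[dict], natural_key: str, as_of: date,
                column: str = "historical_segment") -> Optional[str]:
    """Attribute value from the type 2 row that was in effect on `as_of`."""
    for r in rows:
        if r["natural_key"] == natural_key and r["effective"] <= as_of <= r["expiration"]:
            return r[column]
    return None


def stamp_end_of_period(rows: List[dict], period_end: date) -> None:
    """Populate a type 1 end-of-period column once, during ETL."""
    for r in rows:
        r["end_of_period_segment"] = value_as_of(rows, r["natural_key"], period_end)
```

With the Jan. 5 change from the example, stamping with a Dec. 31 period end gives every row for that customer the pre-change segment, so the Jan. 10 report needs no date arithmetic at query time.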

Type 2 with Natural Keys in the Fact Table

If you have a million-row dimension table with many attributes requiring historical and current tracking, the last technique we described becomes overly burdensome. In this situation, consider including the dimension natural key as a fact table foreign key, in addition to the surrogate key for type 2 tracking. This technique gives you essentially two dimension tables associated with the facts, but for good reason. The type 2 dimension has historically accurate attributes for filtering or grouping based on the effective values when the fact table was loaded. The dimension natural key joins to a table with just the current type 1 values. Again, the column labels in this table should be prefaced with "current" to reduce the risk of user confusion. You use these dimension attributes to summarize or filter facts based on the current profile, regardless of the values in effect when the fact row was loaded. Of course, if the natural key is unwieldy or ever reassigned, then you should use a durable surrogate reference key instead.

This approach delivers the same functionality as the type 2 with "current" overwrite technique we discussed earlier; that technique spawns more attribute columns in a single dimension table, while this approach relies on two foreign keys in the fact table.
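To show the two join paths side by side, here is a minimal sketch in which each fact row carries both a surrogate key (`customer_sk`) and a natural key (`customer_nk`); all names and values are hypothetical. Grouping through the surrogate key gives the historically accurate view, while grouping through the natural key gives the current view.

```python
from collections import defaultdict
from typing import Dict, List

# Type 2 dimension keyed by surrogate key: historically accurate values.
customer_type2 = {1: {"segment": "Retail"}, 2: {"segment": "Wholesale"}}
# Current-values table keyed by natural key: type 1, one row per customer.
customer_current = {"C100": {"current_segment": "Wholesale"}}
# Each fact row carries BOTH keys.
facts = [
    {"customer_sk": 1, "customer_nk": "C100", "amount": 100.0},
    {"customer_sk": 2, "customer_nk": "C100", "amount": 50.0},
]


def rollup(facts: List[dict], dimension: Dict, fact_key: str,
           attribute: str) -> Dict[str, float]:
    """Sum fact amounts grouped by `attribute` of the dimension joined on `fact_key`."""
    totals: Dict[str, float] = defaultdict(float)
    for f in facts:
        totals[dimension[f[fact_key]][attribute]] += f["amount"]
    return dict(totals)
```

Joining on `customer_sk` splits the customer's sales between "Retail" and "Wholesale" as they were at load time; joining on `customer_nk` reports all of it under the current "Wholesale" segment.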

While it's uncommon, we're sometimes asked to roll up historical facts based on any specific point-in-time profile, in addition to reporting them by the attribute values when the fact measurement occurred or by the current dimension attribute values. For example, perhaps the business wants to report three years of historical metrics based on the attributes or hierarchy in effect on Dec. 1 of last year. In this case, you can use the dual dimension keys in the fact table to your advantage. You first filter on the type 2 dimension table's row effective and expiration dates to locate the attributes in effect at the desired point in time. With this constraint, a single row for each natural or durable surrogate key in the type 2 dimension has been identified. You can then join to the natural/durable surrogate dimension key in the fact table to roll up any facts based on the point-in-time attribute values. It's as if you're defining the meaning of "current" on the fly. Obviously, you must filter on the type 2 row dates or you'll have multiple type 2 rows for each natural key, but that's fundamental to the business's requirement to report any history on any specified point-in-time attributes. Finally, only unveil this capability to a limited, highly analytic audience. This embellishment is not for the faint of heart.
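The point-in-time rollup follows the two steps just described: constrain the type 2 rows by date so exactly one row remains per natural key, then join the facts on the natural key. This is a minimal sketch under assumed column names; reporting facts for customers with no row in effect under an `"Unknown"` label is a hypothetical choice, not part of the technique.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, List

HIGH_DATE = date(9999, 12, 31)  # hypothetical open-ended expiration date


def rollup_as_of(facts: List[dict], type2_rows: List[dict],
                 as_of: date, attribute: str) -> Dict[str, float]:
    """Roll up all facts by the attribute values in effect on `as_of`."""
    # Step 1: filter the type 2 rows on effective/expiration dates,
    # leaving exactly one row per natural key.
    value_by_key: Dict[str, str] = {}
    for r in type2_rows:
        if r["effective"] <= as_of <= r["expiration"]:
            if r["natural_key"] in value_by_key:
                raise ValueError(f"overlapping rows for {r['natural_key']}")
            value_by_key[r["natural_key"]] = r[attribute]
    # Step 2: join on the natural (durable) key carried in the fact table.
    totals: Dict[str, float] = defaultdict(float)
    for f in facts:
        totals[value_by_key.get(f["customer_nk"], "Unknown")] += f["amount"]
    return dict(totals)
```

Skipping step 1 would match several type 2 rows per natural key and multiply the facts, which is exactly the trap the article warns about.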

Series of Type 3 Attributes

Say you have a dimension attribute that changes with a predictable rhythm, such as annually, and the business needs to summarize facts based on any historical value of the attribute (not just the historically accurate and current, as we've primarily been discussing). For example, imagine the product line is recategorized at the start of every fiscal year and the business wants to look at multiple years of historical product sales based on the category assignment for the current year or any prior year.

This situation is best handled with a series of type 3 dimension attributes. On every dimension row, have a "current" category attribute that can be overwritten, as well as attributes for each annual designation, such as "2004 category" and "2003 category." You can then group historical facts based on any annual categorization.
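A minimal sketch of the series of type 3 attributes, assuming a hypothetical `category_<year>` column naming scheme and dictionaries standing in for dimension rows (in a real table each new year would mean adding a column). Each annual recategorization adds that year's column and overwrites the current one, so facts can be grouped by any year's assignment.

```python
from collections import defaultdict
from typing import Dict, List


def recategorize(products: Dict[int, dict], fiscal_year: int,
                 new_category: Dict[int, str]) -> None:
    """Start of a fiscal year: add that year's column and overwrite the current one."""
    for key, row in products.items():
        row[f"category_{fiscal_year}"] = new_category[key]
        row["current_category"] = new_category[key]


def sales_by_category(facts: List[dict], products: Dict[int, dict],
                      category_column: str) -> Dict[str, float]:
    """Group all historical facts by whichever annual (or current) category is chosen."""
    totals: Dict[str, float] = defaultdict(float)
    for f in facts:
        totals[products[f["product_key"]][category_column]] += f["amount"]
    return dict(totals)
```

The predictable, once-a-year rhythm is what makes this work: every row gains the same new column at the same moment.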

This seemingly straightforward technique isn't appropriate for the unpredictable changes we described earlier. Customer attributes evolve uniquely. You can't add a series of type 3 attributes to track the prior attribute values ("prior-1," "prior-2" and so on) for unpredictable changes, because each attribute would be associated with a unique point in time for nearly every row in the dimension table.

Balance Power against Ease of Use

Before using hybrid techniques to support sophisticated change tracking, remember to maintain the equilibrium between flexibility and complexity. Users' questions and answer sets will vary depending on which dimension attributes are used for constraining or grouping. Given the potential for error or misinterpretation, hide the complexity (and associated capabilities) from infrequent users.

Hybrid SCDs can sometimes take you for a dizzying ride. Check with your ETL vendors to see if they support any of these techniques in their tools. Some do, which will greatly ease the burden on you.

Beware the Objection Removers

Is that sales pitch flying in the face of conventional wisdom? Start asking questions now.

By Ralph Kimball

An objection remover is a claim made during the sales cycle intended to counter a fear or concern that you have. Objection removers occupy a gray zone in between legitimate benefits and outright misrepresentations. While an objection remover may be "technically" true, it's a distraction intended to make you close the door on your concern and move forward in the sales process without careful thinking.

Objection removers crop up in every kind of business, but more often than not, the more complex and expensive your problem is, the more likely objection removers will play a role. In the data warehouse world, classic objection removers include:

You don't need a data warehouse, now you can query the source systems directly. (Whew! That data warehouse was expensive and complicated. Now I don't need it.)

You can leave the data in a normalized structure all the way to the end-user query tools because our system is so powerful that it easily handles the most complex queries. (Whew! Now I can eliminate the step of preparing so-called queryable schemas. That gets rid of a whole layer of application specialists and my end users can sort out the data however they want. And since I don't need to transform the source data, I can run my whole shop with one DBA!)

Our "applications integrator" makes incompatible legacy systems smoothly function together. (Whew! Now I don't have to face the issues of upgrading my legacy systems or resolving their incompatibilities.)

Centralizing your customer management within our system makes your customer matching problems go away and provides one place where all customer information resides. (Whew! Now I don't need a system for deduplication or merge-purge, and this new system will feed all my business processes.)

You don't need to build aggregates to make your data warehouse queries run fast. (Whew! I can eliminate a whole layer of administration and all those extra tables.)

Leave all your security concerns to the IT security team and their LDAP server. (Whew! Security is a big distraction, and I don't like dealing with all those personnel issues.)

Centralizing all IT functions lets you establish control over the parts of your data warehouse. (Whew! By centralizing, I'll have everything under my control, and I won't have to deal with remote organizations that do their own thing.)

Leave your backup worries behind you with our comprehensive solution. (Whew! I didn't really have a comprehensive plan for backup, and I have no idea what to do about long-term archiving.)

Build your data warehouse in 15 minutes. (Whew! Every data warehouse development plan I've reviewed recently has proposed months of development!)

Objection removers are tricky because they are true—at least if you look at your problem narrowly. And objection removers are crafted to erase your most tangible headaches. The relief you feel when you suddenly imagine that a big problem has gone away is so overwhelming that you feel the impulse to rush to the finish line and sign the contract. That is the purpose of an objection remover in its purest form. It doesn't add lasting value: it makes you close the deal.

So, what to do about objection removers? We don't want to throw the baby out with the bathwater. Showing the salesperson to the door is an overreaction. Somehow we have to stifle the impulse to sign the contract and step back to see the larger picture. Here are four steps you should keep in mind when you encounter an objection remover:

1. Recognize the objection remover. When your radar is working, spotting objection removers is easy. A startling claim that flies in the face of conventional practice is almost always too good to be true. A sudden feeling of exhilaration or relief means that you have listened to an objection remover. In some cases, the claim is written in black and white and is sitting in plain view on your desk. In other cases, you need to do a little self-analysis and be honest about why you're suddenly feeling so good.

2. Frame the larger problem. Objection removers work because they narrow the problem to one specific pain, which they decisively nail, but they often ignore the larger complexity of the problem or transfer the hard work to another stage of the process. Once you recognize an objection remover, count to 10 before signing the contract and force yourself to think about the larger context of your problem. This is the key step. You'll be shocked by how quickly these claims lose their luster once they are placed in the proper context. Let's take a second look at the objection removers listed earlier.

You don't need a data warehouse, you can query the source systems directly. This is an old and venerable objection remover that has been around since the earliest days of data warehousing. Originally, it was easy to disqualify this objection remover because the source transaction systems didn't have the computing capacity to respond to complex end-user queries. But with the recent technical advances in grid computing and in powerful, parallel-processing hardware, a good salesperson can argue that the source systems do have the capacity to do the double duty of processing transactions and serving end-user queries. But in this case there's a larger issue: The data warehouse is the comprehensive historical repository for perhaps your most important company asset—your data. The data warehouse is purpose-built to house, protect and expose your historical data. Certainly these issues have come sharply into focus with the recent emphasis on compliance and business transparency. Transaction processing (legacy) systems are virtually never built with the goal of maintaining an accurate historical perspective on your data. For instance, if the transaction system ever modifies a data element by destructively overwriting it, you've lost historical context. You must have a copy of the transaction system because the historical context is structurally different from the volatile data in a transaction system. Thus, you must have a data warehouse.

You can leave your data in a normalized structure all the way to the end users. The larger issue: Support systems will only work if they seem simple to the end users. Building a simple view is hard work that requires very specific design steps. In many ways, the delivery of data to the end users and their BI tools is analogous to the final delivery made by a restaurant chef from the kitchen through the door to the customers in the dining room. We all recognize the contribution made by the chef in making sure the food is perfect just as it's being taken from the kitchen. The transfer of an (uncooked) complex database schema to end users and their immediate application support specialists is in some ways worse than an objection remover: It's a snare and a delusion. To make this work, all the transformations necessary to create a simple view of the data are still required, but the work has been transferred out of IT to the end user. Finally, if the simple data views are implemented as relational database "views," then the simple queries all devolve into extremely complex SQL that requires an oversized machine to process!

Applications integrators make incompatible legacy systems smoothly function together. The larger issue: Incompatibilities don't come from deficiencies in any technology, but rather from the underlying business practices and business rules that create the incompatible data in the first place. For any application integration technology to work, a lot of hard work must take place up front, defining new business practices and business rules. This hard work is mostly people sitting around a table hammering out those practices and rules, getting executive support for the "business process reengineering" that must be adopted across the organization and then manually creating the database links and transformations that make an integrated system work.

A centralized customer management system makes your customer data issues go away. This is a subtle objection remover that's not as blatant as the first three. Probably every company would benefit from a rational single view of their customers. But only a core part of the customer identity should be centralized. Once a single customer ID has been created and deployed through all customer-facing processes, and selected customer attributes have been standardized, there remain many aspects of the customer that should be collected and maintained by individual systems. In most cases, these systems are located remotely from a centralized site. For example, in a bank, specific behavior scores for credit-card usage are rarely good candidates for transferring to the central customer repository.

You don't need to build aggregates. The larger issue: Data warehouse queries typically summarize large amounts of data, which implies a lot of disk activity. Supporting a data warehouse requires that you constantly monitor patterns of queries and respond with all the technical tools possible to improve performance. The best ways to improve query performance in data warehouse applications, in order of effectiveness, have proven to be 1) clever software techniques building indexes on the data, 2) aggregates and aggregate navigation, 3) large amounts of RAM, 4) fast disk drives and 5) hardware parallelism. Software beats hardware every time. The vendors that talk down aggregates are hardware vendors that want you to improve the performance of slow queries on their massive, expensive hardware. Aggregates, including materialized views, occupy an important place in the overall portfolio of techniques for improving performance of long-running queries. They should be used in combination with all the software and hardware techniques to improve performance.
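Aggregate navigation is what lets aggregates speed up queries without users having to know they exist. A minimal sketch, assuming each table advertises the set of attributes it can be grouped by and its row count (table names and sizes are hypothetical): the navigator picks the smallest table that can answer the query, and the base fact table, which covers every attribute, is the natural fallback. Real aggregate navigators use considerably richer metadata.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class FactTable:
    name: str
    group_by: FrozenSet[str]  # attributes this table can be grouped by
    row_count: int


def choose_table(tables: List[FactTable], requested: Iterable[str]) -> FactTable:
    """Pick the smallest table whose attributes cover every requested attribute."""
    wanted = frozenset(requested)
    candidates = [t for t in tables if wanted <= t.group_by]
    if not candidates:
        raise LookupError(f"no table can answer a query on {sorted(wanted)}")
    return min(candidates, key=lambda t: t.row_count)
```

A query by month and category would be routed to a small monthly category aggregate, while a query by individual date and store would still fall through to the base fact table.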

Leave all your security concerns to the IT security team. The larger issue: Data warehouse security can only be administered by someone who is simultaneously aware of the data content and of the user roles entitled to use that data. The only staff who understand both these domains are the data warehouse staff. Controlling data security is a fundamental and unavoidable responsibility of the data warehouse team.

Centralizing all IT functions lets you establish control over the parts of your data warehouse. This objection remover is a cousin of the earlier application integration claim. But this general claim of centralization is more dangerous because it is less specific and therefore harder to measure. Strong centralization has always appealed to the IT mentality, but in the largest organizations, centralizing all IT functions through a single point of control has about as much chance of succeeding as a centrally planned economy. The grand experiment of centrally planned economies in eastern Europe lasted most of the 20th century and was a spectacular failure. The arguments for centralization have a certain consistency, but the problem is that it's too expensive and too time consuming to do fully centralized planning, and these idealistically motivated designs are too insular to be in touch with dynamic, real-world environments. These plans assume perfect information and perfect control, and are too often designs of what we'd like to have, not designs reflecting what we actually have. Every data architect in charge of a monolithic enterprise data model should be forced to do end-user support.

Leave your backup worries behind you. Developing a good backup-and-recovery strategy is a complex task that depends on the content of your data and the scenarios under which you must recover that data from the backup media. There are at least three independent scenarios: 1) immediate restart or resumption of a halted process such as a data warehouse load job, 2) recovery of a dataset from a stable starting point within the past few hours or days, as when a physical storage medium fails, and 3) very long-term recovery of data when the original application or software environment that handles the data may not be available. The larger picture for this objection remover, obviously, is that each of these scenarios is highly dependent on your data content, your technical environment and the legal requirements, such as compliance, that mandate how you maintain "custody" of your data. A good backup strategy requires thoughtful planning and a multipronged approach.

Build your data warehouse in 15 minutes. I've saved the best one for last! The only way you can build a data warehouse in 15 minutes is to narrow the scope of the data warehouse so drastically that there's no extraction, no transformation and no loading of data in a format meant for consumption by your BI tools. In other words, the definition of a 15-minute data warehouse is one that is already present in some form. Too bad this objection remover is so transparent.... It doesn't even offer a temporary feeling of relief.

There's always a letdown after shining a bright light on these objection removers by examining their larger context. Life is complex, after all.

Let's finish our list of four steps:

3. Create the counterargument. Remember, most objection removers aren't patently fraudulent, but by creating a good counterargument, you'll remove the impulse factor and understand the full context of your problem. This is also an interesting point at which you'll see if the salesperson has a textured, reasonable view of a product or service.

4. Make your decision. It's perfectly reasonable to buy a product or service even when you've detected an objection remover. If you've placed it in an objective context or dismissed it altogether yet are still feeling good about the product, then go for it!

Quick Study: Kimball University DW/BI Best Practices

Do you recognize the following "objection removers" designed to relieve your concerns so you'll move ahead with buying a product?

You don't need a data warehouse—now you can query the source systems directly.

You can leave the data in a normalized structure all the way to the end user query tools because our system is so powerful that it easily handles the most complex queries.

Our "applications integrator" makes incompatible legacy systems smoothly function together.

Centralizing your customer management within our system makes your customer matching problems go away and provides one place where all customer information resides.

You don't need to build aggregates to make your data warehouse queries run fast.

Build your data warehouse in 15 minutes.

Startling claims that fly in the face of conventional wisdom and practice are almost always objection removers, so be wary of such claims. Objection removers contain a core claim that has value, but they usually oversimplify or omit the larger context of your concerns. Don't dismiss a product the moment you hear such a claim; the best way to respond is to develop a sound counterargument that clarifies your concerns. If you still feel good about a product, you can move forward knowing you're not making an impulsive decision.

Shortage of mainstream analyzing client tools

Cognos (4 points)

o Users feel confuse what time should use which tools to analyzing cube among Impromptu, Powerplay and Cognos Query

o Analyzing tools limited functions cause that users have to change tools to complete complicated analyzing requirement

o Have to refresh cubes to adapt structure changing

o Cubes will became too big to manage it, need to separate it

o Limited cube size, weak ability to access details data

Hyperion (4.5 points)

o Ondemand Server's load-balancing capability is weak

o Constrained by IP redirection issues caused by Cisco's network load-balancing technology

o The client/server analysis function retrieves data to the desktop: a slow query keeps users waiting and puts pressure on the network, and a time-consuming query cannot be cancelled from the interface

Business Objects (3.5 points)

o Hard for end users to get started; the analysis interfaces are not easy to understand

o Limited UNIX support (Broadcast Server has no UNIX version …)

o No anomaly-alert function

o Cannot access third-party products' cubes over the web

o Web reporting is limited, and IE has to download a plug-in (applet or ActiveX) the first time a report is viewed

o The micro-cube technique causes performance problems on the desktop

o The platform is a set of tools rather than components

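We all know that in Oracle every SQL statement must be parsed before it is executed, and that parsing comes in two forms: the soft parse and the hard parse. How do the two differ? How is each carried out? And what steps does Oracle go through internally? Let's explore these questions together.

Oracle has two kinds of SQL statements. DDL statements are never shared, so every execution requires a hard parse. DML statements undergo either a hard parse or a soft parse, depending on the circumstances.

Page 1-12 of the Oracle 8i OCP courseware for 023 describes the parsing steps. Once a SQL statement has been passed from the client process to the server process, the following steps are performed:

• Search the shared pool for an existing copy of the SQL statement
• Verify that the syntax of the SQL statement is correct
• Perform data dictionary lookups to verify table and column definitions
• Acquire parse locks on the objects so that their definitions do not change while the statement is being parsed
• Check the user's privileges to access the referenced schema objects
• Determine the optimal execution plan for the statement
• Load the statement and its execution plan into the shared SQL area

This first impression stuck with me for a long time. I believed a hard parse consisted of exactly the steps above, and that a soft parse, having found an existing copy in the first step, only needed to verify that the user had permission to execute it. Skipping several steps that way would make a soft parse far cheaper than a hard parse. I held this view even in forum discussions, until the other day I read the chapter on statement processing in Tom Kyte's Effective Oracle by Design and realized that the view I had defended all along was wrong. In fact, Oracle parses a SQL statement in the following steps: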

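1. Syntax check. Oracle determines whether the statement conforms to SQL syntax rules. For example, if we execute:

SQL> selet * from emp;

the SELECT keyword is missing a "c", so the statement cannot pass the syntax check.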

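2. Semantic check. The second step in parsing a syntactically correct statement is to determine whether the tables and columns it accesses are valid, and whether the user has the privileges to read or modify them. For example:

SQL> select * from emp;
select * from emp
              *
ERROR at line 1:
ORA-00942: table or view does not exist

Because the querying user has no emp object it can access, the statement fails the semantic check.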

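3. Check whether an identical statement already exists in the shared pool. If a copy of the statement is already in the shared pool, the statement is soft parsed: the execution plan and optimization produced by the earlier parse can be reused, skipping the most resource-intensive part of parsing. This is why we keep stressing that hard parses should be avoided. This step breaks down further into two sub-steps: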

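(1) Verify that the SQL statements are exactly the same. Oracle applies a hash function to the incoming SQL text and compares the resulting hash value with those of the statements already in the shared pool to see whether one matches. The hash values of statements currently in the database can be read from the HASH_VALUE column of dictionary views such as v$sql, v$sqlarea and v$sqltext. Even when the hash values match, Oracle still has to check the statement again to decide whether the two really are the same. Why check again, if the hash values already match? Because a matching hash value does not mean the two statements can be shared. Consider this example: user A owns a table EMP and runs select * from emp; user B also owns an EMP table and runs the same select * from emp. The two statements are textually identical and have the same hash value, but because they query different tables they cannot actually be shared. What happens if user C then runs the same statement, where emp resolves to a public synonym for SCOTT's table, and SCOTT also runs it against his own emp table?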

Code:

SQL> connect a/a
Connected.
SQL> create table emp ( x int );
Table created.
SQL> select * from emp;
no rows selected
SQL> connect b/b
Connected.
SQL> create table emp ( x int );
Table created.
SQL> select * from emp;
no rows selected
SQL> conn scott/tiger
Connected.
SQL> select * from emp;
SQL> conn c/c
Connected.
SQL> select * from emp;
SQL> conn / as sysdba
Connected.
SQL> select address, hash_value, executions, sql_text
  from v$sql
 where upper(sql_text) like 'SELECT * FROM EMP%'
/

ADDRESS  HASH_VALUE EXECUTIONS SQL_TEXT
-------- ---------- ---------- ------------------------
78B89E9C 3011704998          1 select * from emp
78B89E9C 3011704998          1 select * from emp
78B89E9C 3011704998          2 select * from emp

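These four queries have identical statement text and hash values, but because they reference different objects, only the last two (SCOTT's and user C's, which both resolve to SCOTT's emp) can be shared; the others still require a hard parse. So whether a matching statement in the shared pool can be reused depends on the specific circumstances. We can query v$sql_shared_cursor to find out why a statement could not be shared: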

Code:

SQL> select kglhdpar, address,
       auth_check_mismatch, translation_mismatch
  from v$sql_shared_cursor
 where kglhdpar in
       ( select address
           from v$sql
          where upper(sql_text) like 'SELECT * FROM EMP%' )
/

KGLHDPAR ADDRESS  A T
-------- -------- - -
78B89E9C 786C9D78 N N
78B89E9C 786AC810 Y Y
78B89E9C 786A11A4 Y Y

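TRANSLATION_MISMATCH (column T in the output) means the base objects referenced by the existing child cursor do not match; AUTH_CHECK_MISMATCH (column A) means the authorization/translation check for the same SQL statement failed against the existing child cursor.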
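To make the rule in sub-step (1) concrete, here is a minimal Python sketch, assuming a toy shared pool in which parent cursors are located by a hash of the SQL text and each parent keeps one child cursor per resolved object. The class names, the `catalog` dictionary standing in for name resolution, and the use of `zlib.crc32` as the hash are all hypothetical; Oracle's real structures and hash function differ. Replaying the four sessions from the example yields three children, with user C's query sharing SCOTT's child, which mirrors the EXECUTIONS values in the v$sql output.

```python
import zlib
from dataclasses import dataclass, field


@dataclass
class ChildCursor:
    base_object: str  # fully qualified object the text resolved to, e.g. "A.EMP"
    executions: int = 0


@dataclass
class ParentCursor:
    sql_text: str
    children: list = field(default_factory=list)


class ToySharedPool:
    """Toy model of cursor sharing; not Oracle's implementation."""

    def __init__(self, catalog):
        # catalog: (user, name) -> "OWNER.NAME"; a hypothetical stand-in for
        # name resolution (the user's own object first, then a PUBLIC synonym)
        self.catalog = catalog
        self.buckets = {}  # hash value -> list of ParentCursor

    @staticmethod
    def text_hash(sql_text):
        # Stand-in hash function; Oracle's HASH_VALUE is computed differently
        return zlib.crc32(sql_text.encode())

    def resolve(self, user, name):
        return self.catalog.get((user, name)) or self.catalog[("PUBLIC", name)]

    def parse(self, user, sql_text, table):
        # Find the parent by hash, then confirm the text really is identical
        bucket = self.buckets.setdefault(self.text_hash(sql_text), [])
        parent = next((p for p in bucket if p.sql_text == sql_text), None)
        if parent is None:
            parent = ParentCursor(sql_text)
            bucket.append(parent)
        # Identical text is not enough: the resolved object must match too
        target = self.resolve(user, table)
        for child in parent.children:
            if child.base_object == target:
                child.executions += 1
                return "soft"
        parent.children.append(ChildCursor(target, executions=1))
        return "hard"


catalog = {
    ("A", "EMP"): "A.EMP",
    ("B", "EMP"): "B.EMP",
    ("SCOTT", "EMP"): "SCOTT.EMP",
    ("PUBLIC", "EMP"): "SCOTT.EMP",  # user C owns no EMP and uses the synonym
}
pool = ToySharedPool(catalog)
sql = "select * from emp"
results = [pool.parse(user, sql, "EMP") for user in ("A", "B", "SCOTT", "C")]
print(results)  # ['hard', 'hard', 'hard', 'soft']
```

The object name is passed in as a parameter rather than parsed out of the SQL, purely to keep the sketch short.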

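(2) Verify that the execution environment is the same. For example, if one session runs a statement with optimizer_mode set to FIRST_ROWS and another runs the same text with ALL_ROWS, they will get different execution plans even though they query the same data. (Hints such as /*+ first_rows */ are part of the statement text, so statements that differ only in their hints already fail the text comparison in sub-step (1).) The following example shows how the execution environment affects parsing: we change the session's workarea_size_policy and observe the effect on the same SQL statement: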

Code:

SQL> alter system flush shared_pool;
System altered.
SQL> show parameter workarea_size_policy

NAME                                 TYPE        VALUE
------------------------------------ ----------- --------------
workarea_size_policy                 string      AUTO
SQL> select count(*) from t;

  COUNT(*)
----------
      5736
SQL> alter session set workarea_size_policy=manual;
Session altered.
SQL> select count(*) from t;

  COUNT(*)
----------
      5736
SQL> select sql_text, child_number, hash_value, address
  from v$sql
 where upper(sql_text) = 'SELECT COUNT(*) FROM T'
/

SQL_TEXT                       CHILD_NUMBER HASH_VALUE ADDRESS
------------------------------ ------------ ---------- --------
select count(*) from t                    0 2199322426 78717328
select count(*) from t                    1 2199322426 78717328

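Because workarea_size_policy differed between the two executions, even an identical SQL statement could not be shared, and a second child cursor was created. Querying v$sql_shared_cursor further shows that the optimizer environments of the two executions differ (column O is OPTIMIZER_MISMATCH):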

Code:

SQL> select optimizer_mismatch
  from v$sql_shared_cursor
 where kglhdpar in
       ( select address
           from v$sql
          where upper(sql_text) = 'SELECT COUNT(*) FROM T' );

O
-
N
Y

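After the three checks above, if a matching statement is found, Oracle reuses the execution plan and optimization of the existing statement; this is what we usually call a soft parse. If no identical copy of the statement is found, a hard parse is required.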
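The soft-versus-hard decision can be sketched by extending the matching key to include the execution environment. In this hypothetical Python model, a child cursor's key combines the resolved object with a snapshot of optimizer-relevant session parameters, so the same text parsed under a different workarea_size_policy gets a new child number, and returning to the original setting finds the existing child again. The class and field names are illustrative assumptions, not Oracle internals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChildKey:
    base_object: str
    optimizer_env: tuple  # sorted (parameter, value) pairs captured at parse time


class ToyCursorCache:
    """Toy model: a child cursor is reused only if the objects AND the
    optimizer environment match; not Oracle's actual data structure."""

    def __init__(self):
        self.children = {}  # sql_text -> list of ChildKey (index = child number)

    def parse(self, sql_text, base_object, session_params):
        key = ChildKey(base_object, tuple(sorted(session_params.items())))
        children = self.children.setdefault(sql_text, [])
        if key in children:
            # Same text, same object, same environment: reuse (soft parse)
            return ("soft parse", children.index(key))
        # No matching child: optimize again and add a new child (hard parse)
        children.append(key)
        return ("hard parse", len(children) - 1)


cache = ToyCursorCache()
sql = "select count(*) from t"
results = [
    cache.parse(sql, "T", {"workarea_size_policy": policy})
    for policy in ("AUTO", "MANUAL", "AUTO")
]
print(results)
# [('hard parse', 0), ('hard parse', 1), ('soft parse', 0)]
```

The first two results correspond to CHILD_NUMBER 0 and 1 in the v$sql output above.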

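4. Oracle then checks whether the objects referenced by the submitted SQL statement have statistics. If they do, the CBO uses those statistics to generate candidate execution plans (possibly thousands of them) with their costs, and finally picks the plan with the lowest cost. If the objects have no statistics, the execution plan is chosen according to the RBO's default rules. This step is the most resource-intensive part of parsing, which is why we should do our utmost to avoid hard parses. At this point parsing is complete, and Oracle executes the statement and fetches the data according to the execution plan the parse produced.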
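The cost-based choice in step 4 can be illustrated with a deliberately simplified Python sketch. It assumes a made-up cost model (the sum of estimated intermediate row counts for a left-deep join, with a fixed join selectivity of 0.01) and hypothetical row counts; Oracle's CBO weighs access paths, join methods and much richer statistics. When no statistics are supplied, the sketch falls back to a fixed rule, loosely mirroring the RBO fallback described above.

```python
from itertools import permutations


def toy_cost(order, row_counts, selectivity=0.01):
    """Hypothetical cost: total estimated rows produced by the intermediate
    results of a left-deep join performed in the given table order."""
    rows = row_counts[order[0]]
    cost = rows
    for table in order[1:]:
        rows = rows * row_counts[table] * selectivity
        cost += rows
    return cost


def choose_plan(tables, row_counts=None):
    if row_counts is None:
        # No statistics: apply a fixed rule instead of costing
        # (toy stand-in for the RBO: keep the order the tables were listed in)
        return tuple(tables), None
    # Statistics available: cost every candidate join order, keep the cheapest
    costs = {order: toy_cost(order, row_counts) for order in permutations(tables)}
    best = min(costs, key=costs.get)
    return best, costs[best]


stats = {"A": 1000, "B": 10, "C": 100}  # hypothetical row counts
print(choose_plan(["A", "B", "C"], stats))  # (('B', 'C', 'A'), 120.0)
print(choose_plan(["A", "B", "C"]))         # (('A', 'B', 'C'), None)
```

Even three tables give six join orders to cost, and the count grows factorially with the number of tables, which is one reason a hard parse is expensive.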